Successful integration of AI-run processes: potential challenges and solutions

A primer on overcoming three data governance and privacy issues within the payments industry

DISCLAIMER: Articles are written to reflect the interests and views of the author(s), and are not intended as an official Payments Canada statement or position.

Summary

As artificial intelligence (AI) revolutionizes the world, many industrial sectors require the safe and seamless implementation of new technologies in order to survive and keep up with fully automated times. The payments sector is no exception. AI has the potential to significantly improve the analysis and processing of transactions. It offers significant opportunities in a variety of areas, including customer service, regulatory compliance, payment processing, anti-money laundering, and fraud detection. However, these numerous use cases may introduce or exacerbate financial and non-financial risks, as well as raise concerns about data management, explainability issues (chiefly, decoding the transformation process of financial inputs into a decision output), ethics, data sensitivity and security, and regulatory and supervisory requirements, amongst others.

This paper gives an overview of the main risks and challenges that businesses and their customers may face when using AI technologies in the payments sector, as well as potential mitigation strategies with the aim of accelerating and smoothing AI adoption.

The AI revolution

Economic development is a dynamic, ongoing process that is heavily reliant on resource mobilization and innovation. The term "Industry 4.0" refers to the new and more profound transformation that is currently taking place in the world and is based on the integration of the most recent technological advancements, such as the Cyber-Physical System (CPS), the Internet of Things (IoT), cloud computing, artificial intelligence, and industrial IoT, into nearly every industry in the economy (Schwab, 2017; Islam et al., 2019). The concept of Industry 4.0 encompasses both the integration of value chain elements into the automation system and their mutual assimilation. As a result, machine learning and artificial intelligence are critical for achieving a faster and more effective industrial transformation.

Artificial intelligence is already pervasive in our daily lives, from self-driving cars and drones to virtual assistants and investment and translation software. Recent years have seen impressive advancements in AI thanks to the exponential growth of computing power and the availability of vast amounts of data (Schwab, 2017). Many industrial sectors in the current era of change require the implementation of artificial intelligence in order to survive and keep up with fully automated times (Sardjono et al., 2021). AI has the potential to significantly improve the way we analyze and process transactions in the finance industry. As regulations become more complex and the fight against fraud intensifies, AI may become a critical component in unlocking a more efficient and secure global payment system (OECD, 2021).

AI applications in the payments industry

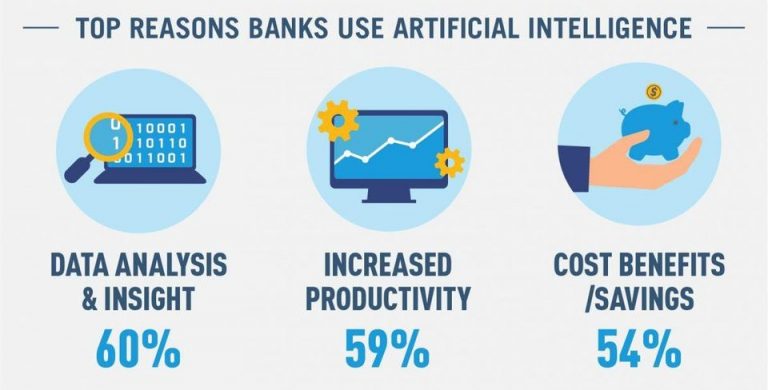

The financial sector is at the forefront of implementing new technologies to enhance the services it provides. Artificial intelligence applications have served as the foundation for recent advancements in the financial technology, or fintech, industry (IDC and Microsoft Canada, 2020). In the financial services and payments sectors, AI in particular offers significant opportunities in the following areas:

- Fraud detection/prevention through the identification of unusual transactions and staff behavior, such as logging into banking systems after hours, transactions involving very large amounts, transactions started by an unexpected party, or payments to a destination company or country with which the organization has never conducted business. To this end, the Bank of Canada partnered with MindBridge to detect anomalies in large-scale payment transactions data and with startup PigeonLine to categorize sensitive data (Simpson, 2019);

- Payment processing, in particular its speed and efficiency, can be improved by reducing the amount of human involvement. AI tools, for example, enable direct payment processing, workflow automation, decision support, and image recognition in documents. Additionally, as speech recognition technology advances, banks are increasingly able to process voice-activated payments made using a smartphone or smart speaker;

- Know Your Customer (KYC) processes for onboarding new clients may involve using natural language processing to read through documents, analyze them, report their findings to decision makers, and even cross-reference the documents with external sources. As a result, both banks and their clients could benefit from a faster, safer, and more efficient onboarding process;

- Anti-money laundering and regulatory compliance can be achieved by using anomaly detection techniques to validate client transactions and detect patterns that indicate illegal activity. Despite the lack of evidence to support its widespread use, AI is likely to be used for this purpose in the future. Promisingly, through its acquisition of Brighterion, Mastercard Services revamped its AI-based payment transactions consulting arm, which has been assisting financial institutions in meeting their anti-money laundering and compliance goals;

- Risk reduction through the prediction of customer credit card behavior and the maintenance of behavioral scoring based on data gathered from the customers' transaction history by banks, fintech companies, and credit card companies;

- Customer service is where AI is deployed most commonly given its simplicity and reduction in human interaction-related costs. Currently, chatbots—computer programs made to converse with people via voice or text—can answer different kinds of client questions and perform simple tasks like setting up or canceling standing orders or direct debits or providing personalized information on payments that a client is unsure about. Recently, TD Securities worked with IBM Watson to operationalize a chatbot available to customers to answer questions about precious metals (IBM, 2022);

- Digital ID has established itself as a less expensive, safer, and quicker alternative to conventional identification authentication processes for businesses, as was discussed in a previous edition of Payment Perspectives. It offers more affordable and improved payment security and can be applied as a fraud prevention measure;

- Legal document production and complex, industry-specific translations in real time, particularly for the legal and financial services sectors. Alexa Translations A.I. is an illustration of a cutting-edge machine translation platform in this space.
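The fraud-detection signals listed in the first bullet above (after-hours activity, very large amounts, an unexpected initiating party, and a destination the organization has never dealt with) can be illustrated with a minimal rule-based sketch. This is only an illustration under stated assumptions: production systems of the kind cited above typically rely on learned anomaly detection rather than fixed rules, and the `Payment` fields, the `fraud_flags` helper, the business-hours window, and the amount threshold are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Payment:
    # Hypothetical minimal record; real payment messages carry many more fields.
    amount: float
    initiated_at: datetime
    initiator: str
    destination: str


def fraud_flags(payment, known_destinations, expected_initiators,
                large_amount=100_000.0, business_hours=(8, 18)):
    """Return the names of the simple rules that a payment trips.

    The threshold and the business-hours window are illustrative assumptions.
    """
    flags = []
    start, end = business_hours
    # Activity outside the assumed business-hours window.
    if not (start <= payment.initiated_at.hour < end):
        flags.append("after_hours")
    # Amount at or above the assumed "very large" threshold.
    if payment.amount >= large_amount:
        flags.append("large_amount")
    # Transaction started by a party that does not normally initiate payments.
    if payment.initiator not in expected_initiators:
        flags.append("unexpected_initiator")
    # Destination the organization has never paid before.
    if payment.destination not in known_destinations:
        flags.append("new_destination")
    return flags


payment = Payment(
    amount=250_000.0,
    initiated_at=datetime(2023, 1, 14, 23, 30),
    initiator="temp-contractor",
    destination="Acme Offshore Ltd",
)
print(fraud_flags(
    payment,
    known_destinations={"Maple Supplies Inc"},
    expected_initiators={"treasury-team"},
))
```

A payment that trips several rules at once would be a natural candidate for human review, in line with the emphasis on human oversight elsewhere in this paper.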

Is there any risk to using AI?

These myriad uses bring us to the main problem in this case: how do we keep AI-run processes successful and safe, especially when they have significant value or responsibility? Although Canada remains a leader in AI research through prominent initiatives from organizations such as CIFAR (the Canadian Institute for Advanced Research) and has a federal AI policy, there is no national government AI strategy for departments and agencies across federal, provincial, or municipal levels (Brandusescu, 2021). The lack of standards and methodologies slows AI adoption. Businesses face numerous challenges, including bias in data and algorithms, decision-making explainability issues, data sensitivity, cyber risks, employment risks, a lack of knowledge, regulatory considerations and potential incompatibility with existing regulatory requirements, and many others (Kurshan et al., 2021; OECD, 2021). Highlighting these challenges and determining how they fit into an analytical framework can help to mitigate their impact and accelerate AI adoption. In what follows, we highlight key issues for businesses and their customers, along with suggested mitigation strategies.

Challenge #1: Ethics and bias

The increasing use of AI processes and algorithms in the payments industry is assisting practitioners in modeling risk and vulnerabilities on-the-fly and optimizing decision-making, yet is confounded by risks associated with the use of sensitive data. Financial institutions inherently deal with large quantities of personal and financial data on their consumers alongside their own transaction processing and asset management data, which demands secure and fair analyses. Inadequately supervised use and faulty management of AI algorithms trained on poorly cleaned data can negatively impact the model results, and thereby do a disservice to the analysts who rely on the findings.

The eventual performance of AI algorithms depends largely on the training data used to develop the model and teach the forms of responses expected. Depending on how they are deployed by financial institutions, such models have the potential to amplify unchecked biases, whether these are biases that were not treated or removed from the data or those of the model builders themselves. The use of insufficient, poor-quality, or flawed data can perpetuate and strengthen biases and lead to discriminatory outcomes. Firms that infer attributes from other data points (such as gender using purchase history, or marital status using transaction patterns) also risk making assumptions beyond what the evidence supports and can unintentionally further unfair practices.

Whereas greater availability of data may assist in building richer models, improper construction or training logic of AI models using this data may further the concealment of innate biases. The advent of new data sources to supplement existing information on customers gives financial institutions the upper hand in generating multidimensional algorithms to assess the creditworthiness of customers, yet it can exacerbate the challenge of isolating the causal factor when applicants are rejected based on the results of the model. This issue is most prominent among the larger lending institutions that increasingly rely on data from Big Tech like Meta (previously, Facebook), Google, Apple, and Amazon, and may point to market concentration in service provision, such as through the deployment of cloud computing or invasive behavioral tracking models (OECD, 2021).

Although AI models are being increasingly adopted by financial institutions for high-frequency trading and processing transactions, their improper usage may increase market volatility. Overuse of similar models by multiple firms that do not fully understand or cannot explain how the models were built or trained can create a situation that resembles herding at the algorithmic-level, which could decrease the liquidity within an interconnected system (OECD, 2021). Similar one-way market conditions may arise when models are trained with associated datasets that do not feature much differentiation in their produced strategies or when these unchecked models are allowed to conduct vast amounts of self-executed trades.

While data-led behavioral nudges can enable positive savings habits and help track finances efficiently, financial institutions can also use their counterpart, ‘dark nudges’, to promote purely profitable outcomes. From automatically enabling autopay, to using countdown timers to stimulate hastier purchases, to breaking down everyday purchases into convenient installments with buy-now-pay-later modes of payment, these nudges can lead to non-optimal spending decisions by customers, who may feel compelled or even deceived into making a choice they would not have made in the absence of the nudge mechanism. Therein, the role of AI shifts from making suggestions to influencing decisions (Stezycki, 2021).

Solution #1:

The primary step within the successful deployment of beneficial AI processes is to be cognizant that these are not fool-proof models and are prone to intricate challenges. Foremost remains the concern associated with the biases that seep into the data and may distort findings overall. Below, we propose some suggestions for financial institutions to address the ethical constraints surrounding these models and how to alleviate AI-led discrimination.

As AI models are deployed firm-wide across various stages for decision making, the ethical and discriminatory concerns should also be addressed across these multiple levels. Iterative review of models after each stage of development ensures that safety is embedded within the model building process and is not merely an afterthought. As such, firms trying to increase the transparency of their AI algorithms adopt a supervisory model management approach that restricts access to the model itself and the underlying data, inspective policies that monitor activities and outcomes, and frameworks to eliminate biases in collected and stored data (Brighterion, 2022).

The adoption of an overarching policy centered around the implementation of AI processes can assist in the alignment of compliance goals at the state- and firm-wide level. The lack of a defined and cohesive legal framework surrounding the use of AI in Canada increases friction in the coordination of technical and regulatory agencies, and can leave AI mismanagement unchecked within corporations operating in the country. At the institutional level, firms across Europe, Asia, and the United States are assigning supervision responsibility specifically to a technical officer or manager who is accountable for the operation of all AI models. Internationally, implementing similar personal responsibility frameworks is becoming an increasingly visible method of critical compliance within the financial services sphere (Buckley et al., 2021). Akin to accounting audits, external and internal auditors periodically assigned to these firms can help assess and eliminate systemic risks introduced through the adoption of AI models. Instituting such human safeguards against systemic risks can control and prevent herding-like behavior in algorithmic trading (BIS, 2021). A firm's safety system can be further enhanced with the supplementary use of AI auditing software that documents the underlying machinery and processes for necessary compliance and reporting requirements (Firth-Butterfield and Madzou, 2020).

The milieu and the knowledge that practitioners contribute to their respective firms can alleviate concerns stemming from the use of homogeneous data (Lee et al., 2019). When the makers and users of the AI models possess domain expertise in finance to understand the inputs and outputs, they can combine this with their data science capabilities to accurately investigate and contextually interpret the models deployed. Practitioners who are able to synthesize their insights from both financial economics and machine learning are more likely to ascertain the factors impeding the model outcomes. When teams consist of modelers who hail from unique backgrounds, they can collectively address knowledge gaps and reduce the biases apparent in the data and those propagated by the AI algorithms deployed. Bias mitigation during model deployment can be further enhanced with the use of data that is sourced from diverse communities and is updated at short intervals to reflect current behaviors and patterns. Cultural inclusivity at various levels of modeling can pre-emptively address potential discriminatory AI outcomes.

Financial institutions maximize the benefits of AI when it expedites the analysts’ and consumers’ decision-making processes, and when they feel confident and empowered in finalizing their outcome. Customers and employees maximize their experience when the deployed AI model is able to explain the rationale behind the process, when it does not restrict their choices, and when subjects are able to challenge the results determined by the algorithm. Firms can further enable informed decision-making by presenting all applicable options without unduly restricting any offerings (UK Finance and KPMG, 2020). Similarly, in service to their clients, successful firms deploy financial incentive-based nudges solely in instances that increase their overall wellbeing (Moneythor, 2021).

Challenge #2: Data Management

Financial firms and government entities are on the front line of cyber attacks that attempt to compromise sensitive data, interfere with currently deployed models, or inject malicious data into the training sets of algorithms (‘data poisoning attacks’) to disrupt functioning and extort ransoms. Customers of these institutions frequently voice their concerns regarding data privacy and new methods of security. Moreover, the data itself may be subject to quality concerns that could deteriorate model performance. As such, globally, firms have ramped up investments in data privacy methods and increased governance practices.

When the deployed models deal with information gathered from compromised sources, the implications of misguided outcomes can be grave. Biased data further produces biased results, and this problem is referred to as ‘garbage in, garbage out’ in machine learning academia (So, 2020). Using flawed data, financial institutions could potentially misjudge clients based on their ethnic background, gender, or other visible attributes, and thereby further depress the standing of that community and jeopardize their own reputation (Atkins and Luck, 2021).

The increase in regulatory best practices has initiated a privacy-centric AI modeling approach that limits the use of personally identifiable information. Yet, a small subset of financial institutions still impute these omitted attributes using spending patterns and consumption behaviors, thereby making strong inferences and assumptions that may not always hold true and can have negative consequences. Safe storage of this sensitive data is complicated by the onset of novel cybersecurity attacks that are hard to detect immediately and potentially costly in their damage. Further, navigating this new landscape has led to a diversion of resources through the reprioritization of legal strategies and investment in reporting channels to meet compliance requirements.

Rising consumer awareness about the use of sensitive data in AI models has intensified the call for data control. Collectively, financial institutions store highly sensitive data on virtually every individual, which, if breached, has the potential to lead to theft, fraud, and other white-collar crimes. IBM Security reports that the cost of the average breach in 2021 was US$4.24 million (Hintze, 2021). As such, customers demand data privacy and protection from their institutions across their digital journeys. When transactional or financial data on individuals is sold to third-party firms, consumer trust or activity with the primary firm may be lowered.

Solution #2:

Firms that collect and store sensitive data also share the responsibility of safeguarding it and of deleting it upon request from the individuals from whom it was collected or from regulatory agencies. Although cumbersome, managing data responsibly at every stage of modeling can protect a firm's reputation and avoid future legal costs. Establishing protocols and procedures for timely compliance reporting can minimize time and hassle later. Ultimately, data and modeling should be viewed as supporting, rather than substituting for, human decision-making.

Data protection at every stage of AI model development can reduce the total burden of privacy and security for financial institutions. Such firms benefit from instituting periodic audits of their AI models and training data (Mintz, 2020), assigning governance responsibility to a competent manager, and adopting anonymization protocols for data safety across multiple levels. Adopting an iterative AI model audit process that compares different models, manages data, and monitors model output and performance can heighten the safety and operability of currently deployed models (Somani, n.d.). As a security protocol, many data-dealing firms aggregate or bin sensitive financial and personal data on users before analyzing or sharing it. In addition, synthetic data may be constructed to generate potential risk scenarios and to evaluate model performance. This use of synthetic data over actual customer data can help address privacy issues, democratize data, and minimize bias and costs for financial institutions (Morgan, 2021; Linden, 2022).
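As a rough illustration of the aggregation and binning protocol mentioned above, the sketch below replaces exact transaction amounts with coarse bands, drops customer identifiers, and reports only group counts. The band edges, the record fields, and the `min_group_size` suppression rule are hypothetical assumptions added for illustration; they are not drawn from the cited sources, and binning alone does not guarantee anonymity.

```python
from collections import Counter


def bin_amount(amount, edges=(0, 100, 1_000, 10_000)):
    """Map an exact amount to a coarse band label such as '[0, 100)'."""
    if amount < edges[0]:
        raise ValueError("amount is below the lowest band edge")
    for low, high in zip(edges, edges[1:]):
        if low <= amount < high:
            return f"[{low}, {high})"
    # Anything at or above the last edge falls into an open-ended band.
    return f"[{edges[-1]}, inf)"


def aggregate(records, min_group_size=3):
    """Count records per (region, amount band), dropping small groups.

    Customer identifiers are never copied into the output. Suppressing
    groups below min_group_size is an assumed extra safeguard.
    """
    counts = Counter((r["region"], bin_amount(r["amount"])) for r in records)
    return {key: n for key, n in counts.items() if n >= min_group_size}


# Hypothetical customer-level records.
records = [
    {"customer_id": "c1", "region": "ON", "amount": 42.10},
    {"customer_id": "c2", "region": "ON", "amount": 87.00},
    {"customer_id": "c3", "region": "ON", "amount": 15.75},
    {"customer_id": "c4", "region": "QC", "amount": 2_500.00},
]
print(aggregate(records))
```

In this example the single Quebec record is suppressed because a group of one could identify an individual, while the three Ontario records are reported only as a count within a band.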

Given the risks and costs associated with collecting and storing sensitive data, financial firms can benefit from data minimization. To tackle privacy-related challenges, data minimization in the context of personally identifiable and implied information is a good rule of thumb when training and testing models (OAG Norway et al., 2020). Issues with flawed data can be identified and targeted early on when the data used for model development is sourced from diverse communities, and so are the developers themselves (Atkins and Luck, 2021; Rossi and Duigenan, 2021). Incorporating multiple and varied viewpoints across the model building process can help generate a more trustworthy and inclusive algorithm that is more likely to gain acceptance among the end-users.

Financial institutions can develop a stronger and more reliable relationship with their customers when they feel in control of their data and have a sense of agency. Firms adhering to richer data management among stakeholders offer informed consent in concise and accessible language (Atkins and Luck, 2021), greater data control tools, and restricted visibility of sensitive data. As such, the data collected by these institutions is expressly stated and collected after gaining documented consent in simple language. Further, this data is only exchanged with other parties after obtaining additional consent and establishing new data-sharing terms that do not breach any stakeholder’s trust (Shields, 2020). All customers of such firms have timely access to their data, the ability to erase any or all components, and privacy information regarding the data – such as whether it was anonymized or aggregated before analysis (UK Finance and KPMG, 2020).

Due to the costly and sensitive nature of the data collected by financial firms, international authorities are establishing their own mandates to safeguard consumer and human rights. In its comprehensive data governance mandate for the use of AI in the financial services sector, the Monetary Authority of Singapore (MAS) released guidelines to promote fairness, ethics, accountability, and transparency in the use of data, and to give customers of financial firms wider access to their data. For decisions made by AI-led processes, MAS advocates for channels through which the subjects of the decisions can inquire about and appeal the outcomes, request additional reviews of decisions that directly affect them, and include proper authentication measures for the training data used (MAS, 2018). More recently, in establishing usage recommendations for all Americans, the Office of Science and Technology Policy (OSTP) of the United States’ White House issued the ‘Blueprint for an AI Bill of Rights’ that encompasses data protection methods and standards for firms willing to incorporate safeguards, consumers initiating channels to inquire about their data, or workers advocating for ethical model deployment (OSTP, 2022).

When dealing with sensitive information to navigate critical decisions, leaving the outcome to an improperly supervised algorithm can lead to unexpected results for both the practitioners and the subjects. Keeping in line with the personal responsibility framework being adopted by firms across the globe, the role of logical human judgment, oversight and review should supersede model outcomes. Maintaining human intervention in the decision and design cycle and subsequent reviews can alleviate unusual findings that may isolate or confuse any stakeholders (BIS, 2021).

Challenge #3: Explainability and implementation

AI models use large amounts of data to produce predictions and classifications without simultaneously generating an explanation behind the results. Among practitioners, this is referred to as the ‘black-box approach’ to such algorithms. This may reduce the reliability of the system for a business and increase friction with end-users who may be expecting different outcomes (von Eschenbach, 2021). The opaque decision-making process of the algorithm can further exacerbate issues as it uses sensitive personal and financial data (Buckley et al., 2021). When processing such confidential data, it remains crucial to fully understand and explain how the model arrived at the result. Doing so may reduce the confusion and dismay expressed by customers who are unexpectedly restricted to few choices, and it allows practitioners to return to the model building process and adjust the specific steps that led to an incoherent finding.

The lack of model explainability for financial institutions may interfere with the reception of the outcome by the stakeholders and may even amplify systemic risks within an interconnected system. When the AI model outcomes vary vastly from the subjects’ expectations, they may widen the gap between the firm and the consumers. This challenge is further exacerbated when analysts are unable to determine the factors behind the outcome or how the process generated that choice of outcomes for a specific user (Surkov et al., 2022). The lack of explainability of AI algorithms may impede their overall acceptance and could make them appear less trustworthy (Grennan et al., 2022). When AI models are deployed without a full understanding of the underlying technical processes, the algorithms could worsen market shocks and increase volatility within the system.

The explainability issue is intensified in the financial realm by a disconnect in technical knowledge between the modeling scientists and the end-decision making analysts (OECD, 2021). A gap in technical literacy between data scientists and business managers may misalign the firm’s goals when the demands of the managerial team are not reconciled with the AI process modeled by the technical team. Improper communication related to the modeling objectives may disrupt the outcomes and affect the operability of the AI-run processes.

Firms trying to protect their proprietary AI processes through the concealment of the model may further compound the explainability challenge. An increase in opacity to protect their own modeling advantage may hinder practitioners’ ability to supervise and rectify any underlying issues. For financial firms’ customers, the enforced lack of transparency may erode their trust in the AI recommendation engine and their institution, dampen their ability to adjust their trading strategies, and potentially divert their reliance to non-algorithmic processes (Thomas, 2021).

Although there remains much excitement over the implementation of new technologies in this sector, many practitioners and consumers are still skeptical and unconvinced of the prowess of AI. For the same problem, an AI model can generate multiple possible outcomes, the accuracy of which may not be easy to compare or measure using established metrics and existing key performance indicators. In addition to the measurability problem, there is no accepted standard for accuracy, and the modeling process still necessitates some form of human intervention.

The adoption of new technologies could generate compatibility challenges when updating current infrastructure and regulatory frameworks around the new AI algorithms (Scanlon, 2021). Retrofitting AI processes in established systems may confound findings, create an additional layer of complexity for decision-makers, or even disrupt ongoing operations. Evolving firms may require supplemental technical support in porting over data from existing systems in the formats required to analyze them using the new AI models. Financial firms seeking to implement AI models within their operations invest substantial time and monetary resources in researching and hiring technically competent financial managers or external consultants to guide their decision-making.

Solution #3:

Given the relative novelty of implementing AI processes across the financial services workflow, their take-up and acceptability go hand-in-hand with how clear and approachable they are in their input-to-output conversion. Financial firms are subject to greater regulatory scrutiny given that they deal with inherently sensitive information, and as such, they require more hands-on supervision. Human involvement at various levels of AI modeling can make this process more practitioner- and consumer-friendly.

Inverting the explainability challenge, financial firms are now adopting ‘white-box approaches’ that provide greater direction on the underlying input-to-output transformation process. The increase in adoption of explainable models can demonstrate how the outcomes were derived and how they will be used by the firm (Surkov et al., 2022). Explainable AI, popularly known as XAI, includes modeling techniques that expressly label the inputs and outputs to map the trajectory taken to reach the outcome and can help shed light on their relationship. Domain expertise in finance and data science can bridge the explainability gap through the generation of replicable, trustworthy, and open-access models that associate creditworthiness-related reasons with algorithmic actions. Choosing the appropriate AI-run process for the desired modeling problem, based on an in-depth understanding of the underlying business logic, yields significant improvement in the accuracy of the predictions. Practitioners with deep knowledge of the systems and financial contexts can weave the data with model outcomes using fact-backed storytelling that links inputs with outputs.

Compliance with new reporting and regulatory policies is driving the rapid adoption and safe implementation of AI processes among executive leadership in financial institutions. With a heightened focus on disclosure requirements, technologically-advanced firms are documenting the inner workings of their AI-powered models, which in turn spurs explainability. The use of publicly-accepted open-source models tends to nurture trust and comfort among all stakeholders involved. Agile firms periodically test and retrain their models for up-to-date predictive power. These healthy models are deployed over large synthetic datasets to capture unusual findings, locate non-linear relationships and tail-events, and stress-test with fabricated cases that are probable (OECD, 2021).

Despite the advances in machine learning and autonomous deployment of AI models, human judgment at all stages of deployment can act as the ultimate safeguard against spurious findings (BIS, 2021). Cautious firms collectively use ‘human and machine’ intelligence to inform decisions, wherein the outcomes of the AI process assist the human decision maker. This approach confers control and accountability upon specific individuals who are responsible for matching data with appropriate outcomes. Deep process knowledge and human intervention across all stages of model evaluation can reduce the chances of confusing spurious correlations with definitive and meaningful causal relationships (OECD, 2021). In these varied manners, humans can act as the final executors and retain complete control over the deployed processes.

Conclusion

By enhancing payment processing, regulatory compliance, and anti-money laundering, and creating efficiencies for customer service and fraud detection, AI use-cases in payments have the potential to significantly benefit financial consumers and market participants. Evolving financial institutions rely on the technological edge introduced with the advent of AI to improve their existing processes and to create new competitive opportunities. These AI-run processes are now seen as critical in advancing real-time fraud detection in payment processing, executing smart contracts that release digital currencies upon the automatic approval of preset conditions, and identifying funds linked with anti-money laundering targets. The implementation of AI in payments simultaneously creates new difficulties and has the potential to exacerbate already existing market risks, both financial and non-financial. To encourage and support the use of responsible AI without stifling innovation, emerging risks from the deployment of AI techniques must be identified and mitigated (OECD, 2021).

Although we have already covered some of the risks in depth and offered potential mitigation techniques, it is still crucial to take a step back and consider the bigger picture. The following could serve as a general list of recommendations for companies, policymakers, and decision makers on how to strengthen their defenses against risks caused by, or exacerbated by, the use of AI:

- Increase the policy emphasis on better data governance by financial institutions in an effort to strengthen consumer protection across all financial AI use-cases. Depending on how the AI model is intended to be used, this could address data quality, the suitability of the datasets used, and tools for tracking and correcting concept drift.

- Financial institutions may need to exercise more caution with databases acquired from third parties, and permit only those that have been approved and comply with data governance regulations. Authorities may also consider additional transparency requirements and opt-out options for the use of personal data.

- Along with efforts to improve the quality of the data, measures could be taken to guarantee the model's reliability in terms of avoiding biases. Two examples of best practices to reduce the risk of discrimination are appropriate sense checking of model results against baseline datasets and additional tests based on whether protected classes can be inferred from other attributes in the data.

- To improve model resilience and determine whether the model needs to be adjusted, redeveloped, or replaced, best practices around standardized procedures for continuous monitoring and validation throughout a model's lifetime may be helpful. Depending on the model's complexity and the significance of the decisions it generates, the frequency of testing and validation may need to be specified.

- When it comes to higher-value use-cases that have a significant impact on consumers, it would be appropriate to place more emphasis on the primacy of human decision-making.

- Consumers should be told when AI is used in the delivery of a product, and when they may be interacting with an AI system rather than a human being, so that they can make informed decisions between competing products.

- Investment in research could help to resolve some of the problems with explainability and unintended consequences of AI techniques given the growing technical complexity of AI.
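The recommendation above on testing whether protected classes can be inferred from other attributes can be illustrated with a small check. The sketch below asks how much better one can guess a protected class when a candidate attribute is known, compared with always guessing the most common class. The function name and the toy data are hypothetical, and a production test would use held-out data and a proper classifier.

```python
from collections import Counter, defaultdict


def proxy_check(feature_values, protected_values):
    """Return (baseline_accuracy, accuracy_when_feature_is_known)."""
    n = len(protected_values)
    if n == 0 or n != len(feature_values):
        raise ValueError("inputs must be non-empty and of equal length")
    # Baseline: always guess the most common protected class.
    baseline = Counter(protected_values).most_common(1)[0][1] / n
    # With the feature: guess the most common class within each feature value.
    by_value = defaultdict(Counter)
    for f, p in zip(feature_values, protected_values):
        by_value[f][p] += 1
    correct = sum(c.most_common(1)[0][1] for c in by_value.values())
    return baseline, correct / n


# Toy data: the region fully determines the group, so it acts as a proxy.
regions = ["A", "A", "A", "B", "B", "B"]
groups = ["x", "x", "x", "y", "y", "y"]
baseline, with_feature = proxy_check(regions, groups)
```

A large gap between the two numbers suggests that the attribute may act as a proxy for the protected class. Because the accuracy is measured in-sample, attributes with many distinct values will tend to look more predictive than they really are.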

References

Alexa Translations A.I. (n.d.). https://alexatranslations.com/alexa-translations-a-i/

Atkins, S., and Luck, K. (2021). AI for Banks – Key Ethical and Security Risks. https://blogs.law.ox.ac.uk/business-law-blog/blog/2021/09/ai-banks-key-ethical-and-security-risks

Beckstrom, J. R. (2021). Auditing Machine Learning Algorithms. International Journal of Government Auditing, 48(1), 40-41.

Brandusescu, A. for Centre for Interdisciplinary Research in Montreal, McGill University (2021). Artificial Intelligence Policy and Funding in Canada: Public Investments, Private Interests, https://www.mcgill.ca/centre-montreal/files/centre-montreal/ai-policy-and-funding-in-canada_executive-summary_engfra.pdf

Brighterion. (n.d.). https://brighterion.com/

Brighterion (2022). Explainable AI: from black box to transparency, https://brighterion.com/explainable-ai-from-black-box-to-transparency/#

Buckley, R. P., Zetzsche, D. A., Arner, D. W., & Tang, B. W. (2021). Regulating artificial intelligence in finance: Putting the human in the loop. Sydney Law Review, 43(1), 43-81.

Firth-Butterfield, K. and Madzou, L. (2020). World Economic Forum. Rethinking risk and compliance for the Age of AI. https://www.weforum.org/agenda/2020/09/rethinking-risk-management-and-compliance-age-of-ai-artificial-intelligence/

Grennan, L., Kremer, A., Singla, A., & Zipparo, P. (2022). Why businesses need explainable AI–and how to deliver it. https://www.mckinsey.com/capabilities/quantumblack/our-insights/why-businesses-need-explainable-ai-and-how-to-deliver-it

Hamid, S., & Paturi, P. (2021). AI Solutions for Digital ID Verification: An overview of machine learning (ML) technologies used in Digital ID verification systems. https://payments.ca/ai-solutions-digital-id-verification-overview-machine-learning-ml-technologies-used-digital-id

Henry-Nickie, M. (2019). How artificial intelligence affects financial consumers. https://www.brookings.edu/research/how-artificial-intelligence-affects-financial-consumers/

Hintze, J. (2021). Data Breach Costs Can Run into the Millions. Can Artificial Intelligence Act as a Risk Management Bulwark? https://riskandinsurance.com/data-breach-costs-can-run-into-the-millions-can-artificial-intelligence-act-as-a-risk-management-bulwark/

Hornuf, L., & Mangold, S. (2022). Digital dark nudges. In Diginomics Research Perspectives (pp. 89-104). Springer, Cham. https://doi.org/10.1007/978-3-031-04063-4_5

IBM. (n.d.). Explainable AI. https://www.ibm.com/watson/explainable-ai

IBM. (2022). Artificial Intelligence is Transforming the Financial Services Sector, https://www.ibm.com/blogs/ibm-canada/2022/02/artificial-intelligence-is-transforming-the-financial-services-sector/

IDC and Microsoft Canada (2020). AI in Canadian Financial Services: Trends, Challenges, and Successes, https://info.microsoft.com/rs/157-GQE-382/images/EN-CNTNT-Whitepaper-IDCStudyonAIinCanadianFinancialServicesTrendsChallengesandSuccesses-SRGCM3627.pdf

Islam, I., Munim, K. M., Islam, M. N., & Karim, M. M. (2019, December). A proposed secure mobile money transfer system for SME in Bangladesh: An industry 4.0 perspective. In 2019 International Conference on Sustainable Technologies for Industry 4.0 (STI) (pp. 1-6). IEEE.

Knight, W. (2019). The Apple Card Didn’t ‘See’ Gender–and That’s the Problem. https://www.wired.com/story/the-apple-card-didnt-see-genderand-thats-the-problem/

Kurshan, E., Chen, J., Storchan, V., & Shen, H. (2021, November). On the current and emerging challenges of developing fair and ethical AI solutions in financial services. In Proceedings of the Second ACM International Conference on AI in Finance (pp. 1-8).

Lee, N. T., Resnick, P., & Barton, G. (2019). Algorithmic bias detection and mitigation: Best practices and policies to reduce consumer harms. Brookings Institute: Washington, DC, USA.

Linden, A. (2022). Is Synthetic Data the Future of AI? https://www.gartner.com/en/newsroom/press-releases/2022-06-22-is-synthetic-data-the-future-of-ai

MAS, Monetary Authority of Singapore. (2018). Principles to Promote Fairness, Ethics, Accountability and Transparency (FEAT) in the Use of Artificial Intelligence and Data Analytics in Singapore’s Financial Sector. https://www.mas.gov.sg/~/media/MAS/News%20and%20Publications/Monographs%20and%20Information%20Papers/FEAT%20Principles%20Final.pdf

McNamee, J. (2022). Security and data privacy concerns motivate digital bank consumers to embrace new authentication technologies. https://www.businessinsider.com/data-privacy-concerns-prompt-new-authentication-technology-2022-6

Mintz, S. (2020). Ethical AI is Built On Transparency, Accountability and Trust. https://www.corporatecomplianceinsights.com/ethical-use-artificial-intelligence/

Moneythor. (2021). AI Ethics And Their Application to Digital Banking. https://www.moneythor.com/2021/08/31/ai-ethics-and-their-application-to-digital-banking/

Morgan, L. (2021). Synthetic data for machine learning combats privacy, bias issues. https://www.techtarget.com/searchenterpriseai/feature/Synthetic-data-for-machine-learning-combats-privacy-bias-issues

OAG (Office of the Auditor General) Norway, Supreme Audit Institutions of Finland, Germany, the Netherlands, Norway and the UK. (2020). Auditing Machine Learning Algorithms: A white paper for public auditors. https://www.auditingalgorithms.net/

OECD. (2021). Artificial Intelligence, Machine Learning and Big Data in Finance: Opportunities, Challenges, and Implications for Policy Makers. https://www.oecd.org/finance/artificial-intelligence-machine-learning-big-data-in-finance.htm

OSTP (Office of Science and Technology Policy), White House of the United States of America. (2022). Blueprint for an AI Bill of Rights: A Vision for Protecting Our Civil Rights in the Algorithmic Age. https://www.whitehouse.gov/ostp/news-updates/2022/10/04/blueprint-for-an-ai-bill-of-rightsa-vision-for-protecting-our-civil-rights-in-the-algorithmic-age/

Prenio, J., & Yong, J. (2021). Humans keeping AI in check–emerging regulatory expectations in the financial sector. FSI Insights on policy implementation No 35. Bank for International Settlements (BIS).

Rossi, F., & Duigenan, J. (2021). IBM: The Future of Finance Is Trustworthy AI. https://www.paymentsjournal.com/the-future-of-finance-is-trustworthy-ai/

Sardjono, W., Priatna, W., & Tohir, M. (2021). ARTIFICIAL INTELLIGENCE AS THE CATALYST OF DIGITAL PAYMENTS IN THE REVOLUTION INDUSTRY 4.0. ICIC express letters. Part B, Applications: an international journal of research and surveys, 12(9), 857-865.

Scanlon, L. (2021). The challenges with data and AI in UK financial services. https://www.pinsentmasons.com/out-law/analysis/the-challenges-with-data-and-ai-in-uk-financial-services

Schwab, K. (2017). The fourth industrial revolution. Currency.

Schwartz, O. (2019). In 2016, Microsoft’s Racist Chatbot Revealed the Dangers of Online Conversation: The bot learned language from people on Twitter–but it also learned values. https://spectrum.ieee.org/in-2016-microsofts-racist-chatbot-revealed-the-dangers-of-online-conversation

Simpson, M. (2019). MindBridge Partnering with Bank of Canada to Detect Payment Fraud, http://www.canada.ai/posts/mindbridge-partnering-with-bank-of-canada-to-detect-payment-fraud

So, K. (2020). Data Quality: Garbage In, Garbage Out. https://towardsdatascience.com/data-quality-garbage-in-garbage-out-df727030c5eb

Somani, M. (n.d.). An In-Depth Guide To Help You Start Auditing Your AI Models. https://censius.ai/blogs/ai-audit-guide

Stezycki, P. (2021). Using AI in Finance? Consider These Four Ethical Challenges. https://www.netguru.com/blog/ai-in-finance-ethical-challenges

Stone, M. (2022). Cost of a Data Breach: Banking and Finance. https://securityintelligence.com/articles/cost-data-breach-banking/

Surkov, A., Srinivas, V., & Gregorie, J. (2022). Unleashing the power of machine learning models in banking through explainable artificial intelligence (XAI). https://www2.deloitte.com/us/en/insights/industry/financial-services/explainable-ai-in-banking.html

Thomas, W. (2021). Where enterprise manageability can boost your business. https://www.itpro.com/hardware/368391/where-enterprise-manageability-can-boost-your-business/

UK Finance and KPMG (2020). Ethical principles for advanced analytics and artificial intelligence in financial services. https://assets.kpmg/content/dam/kpmg/uk/pdf/2020/12/ethical-principles-for-advanced-analytics-and-artificial-intelligence-in-financial-services-december-2020.pdf

von Eschenbach, W.J. (2021). Transparency and the Black Box Problem: Why We Do Not Trust AI. Philos. Technol. 34, 1607–1622. https://doi.org/10.1007/s13347-021-00477-0

White House. (2022). Blueprint for an AI Bill of Rights. https://www.whitehouse.gov/ostp/ai-bill-of-rights/

Yalcin, O. G. (2021). Black-Box and White-Box Models Towards Explainable AI. https://towardsdatascience.com/black-box-and-white-box-models-towards-explainable-ai-172d45bfc512

Authors

Anita Smirnova

As an Economist at Payments Canada, Anita Smirnova conducts quantitative research projects to provide economic thought leadership within the payments ecosystem, both internally and externally. In her role, Anita monitors domestic and international payment systems developments and conveys findings on emerging issues that may impact the health of the Canadian economy. She is also responsible for analyzing payments data and generating insights for Payments Canada’s member financial institutions. Her main areas of research include payments modernization, overnight loan market activity, nowcasting, and payment economics.

Tarush Gupta

As an Associate Quantitative Analyst at Payments Canada, Tarush Gupta is responsible for analyzing data from the national payments clearing systems and undertaking research that supports payment modernization initiatives. Previously, he developed econometric and machine learning models to stress-test the intraday liquidity in the high-value payments system along with forecasts of its performance in foreseeable macroeconomic scenarios. Tarush holds a BA and an MA in Economics, and a Master of Management Analytics from the University of Toronto.